(A)live check-in 1

I am very happy I heeded my own words in the last post. Indeed, it pays to develop the visual ideas along with the audio ideas, and not tack on the video at the last stage. It has helped to rein in certain possibilities; namely, it has helped to solidify that this work, (A)live, will have a narrative-type form suggesting a life cycle (or several cycles), and perhaps end with a rising sun that washes everything out with its light. What is life if not just a dream, and at the end (as in the beginning), there is light?

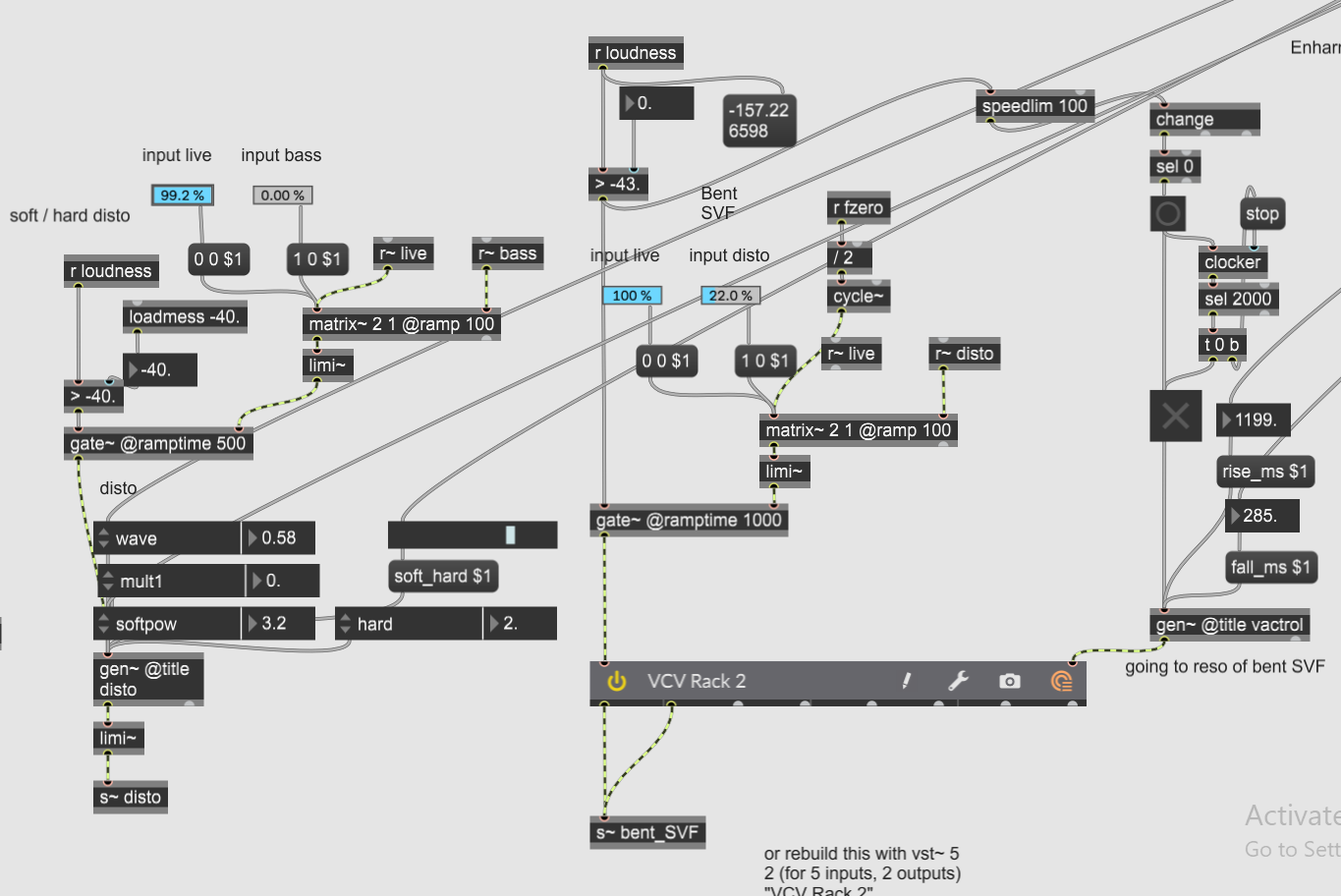

On a more mundane note, I have been highly distracted by the attempt to make a simulation of a faulty amp gate, and spent an inordinate amount of time just figuring out Jitter jargon so I could find out how to interpolate between two video streams. Sequencer building has also been on the list of details that keep me from the big picture. I now have one (thanks to ideas from DK tools - still in beta) that will record rhythms and track your pitches or spectral data for either synthesis or corpus matching. Very efficient use of machine learning. I still don't know which sounds the sequencer should choose. How (or if) these two ideas - feedback and sequencing - can be combined in the piece remains to be seen. I love the idea of sending sequencing information to filter cutoffs to control feedback and things like that, but I am not sure it fits this piece. This sequencer is principally for creating streams of sounds that go in and out of sync - as in life.
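For my own reference, interpolating between two video streams boils down to a weighted blend of matching pixels. The sketch below is plain Python rather than Jitter, and the function name `crossfade` and the tiny 2x2 frames are made up for illustration. As far as I understand it, this is roughly the job an object like `jit.xfade` does on whole matrices.

```python
def crossfade(frame_a, frame_b, mix):
    """Blend two equally sized grayscale frames.

    mix = 0.0 returns frame_a, mix = 1.0 returns frame_b,
    and values in between give a linear interpolation.
    """
    mix = max(0.0, min(1.0, mix))  # clamp so out-of-range control values are safe
    return [
        [(1.0 - mix) * a + mix * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


# Two tiny hypothetical frames with pixel values between 0.0 and 1.0
dark = [[0.0, 0.0], [0.0, 0.0]]
bright = [[1.0, 1.0], [1.0, 1.0]]

halfway = crossfade(dark, bright, 0.5)
```

Sweeping `mix` slowly from 0 to 1 over time would give the fade from one stream into the other.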

I am still torn over whether I should run some simulations in Jitter, in parallel to Tölvera, in order to interpolate between the two (for example, a Tölvera being fading into a Lorenz attractor butterfly shape), or whether I should dive into the Python code of Tölvera again and find ways to make modulations/interpolations within the code, hopefully via OSC. Not sure yet. ATM I am thinking of simple stuff like using my orbit.py and adding or subtracting flocking or slime behavior. I think this may be the more stable idea. Then I would only use Jitter for brightness, color and other shading effects.
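To get a feel for the "being fading into a butterfly" idea, here is a minimal sketch in plain Python. It is not Tölvera code: `lorenz_points` and `morph` are hypothetical helper names, the particle positions are stand-ins, and the integration is a simple Euler step with the classic Lorenz parameters (sigma = 10, rho = 28, beta = 8/3). The `amount` value is the kind of single number that could, in principle, be sent over OSC.

```python
def lorenz_points(n, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Return n points of a Lorenz trajectory, projected onto the x-z plane."""
    x, y, z = 1.0, 1.0, 1.0
    points = []
    for _ in range(n):
        # Lorenz equations, advanced with a basic Euler step
        dx = sigma * (y - x)
        dy = x * (rho - z) - y
        dz = x * y - beta * z
        x, y, z = x + dx * dt, y + dy * dt, z + dz * dt
        points.append((x, z))  # the x-z view shows the butterfly shape
    return points


def morph(particles, targets, amount):
    """Move each 2D particle a fraction `amount` of the way to its target."""
    return [
        (px + amount * (tx - px), py + amount * (ty - py))
        for (px, py), (tx, ty) in zip(particles, targets)
    ]


# Hypothetical stand-in for particle positions coming from a simulation
particles = [(0.0, 0.0)] * 1000
targets = lorenz_points(len(particles))

# amount = 0.0 keeps the original positions, 1.0 lands on the attractor
blended = morph(particles, targets, 0.25)
```

Whether the blend happens inside the Python simulation or later in Jitter, the control side stays the same: one value between 0 and 1.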

Until the next check-in!