New Blog

It's the Year of the Snake (2025), so one of my resolutions is to deepen my understanding of Python and Taichi (a domain-specific language embedded in Python). With these tools, I can run simulations of fluids, orbits, mechanics, and even organic behaviors such as flocking and cellular growth. These simulations give me streams of data that can modulate musical features. Some modulations affect surface-level features such as stereo movement or loudness; others take place at the DSP level, such as manipulating feedback and delays. The data can even be fed into interactive machine learning to perform classification and regression on parameters such as the choice of preset or audio input channel.

What I want to make clear about my experiments, compositions, installations, and research is this: all music, whether recorded or live, is produced by humans. There is no AI-generated content. I use AI to create networks for manipulating sound and visuals: DSP processing, stereo diffusion, behavioral interactions, and manipulations of the color and shape of visuals.

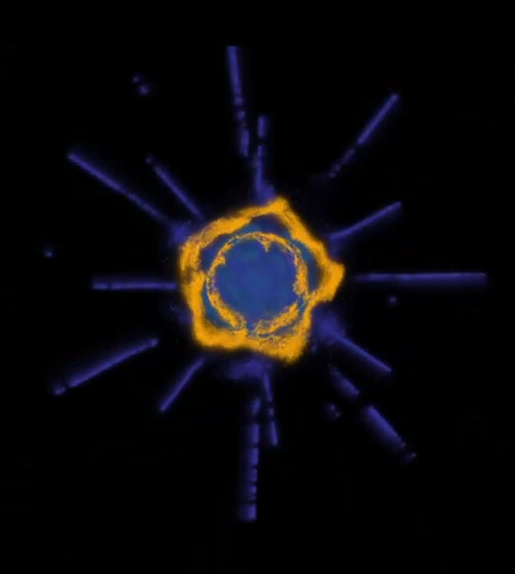

Modulating parameters is the "easy", fun part. What is more challenging is finding an overall form for a composition. This is where I am hoping that these physical and organic simulations can also be an inspiration. They produce really cool visuals, and I like to think of them as short, abstract animations for which I am creating a soundtrack. Or, in the case of an organic simulation, I am encapsulating a life cycle in sound and vision. "Soundtrack" is not exactly the correct term, though, because the audio and visuals should be interactive.

Making audio and visuals interactive is fairly easy with OSC (Open Sound Control). My challenge has been to take parameters from a long program written in Python or Taichi and find ways to expose them over OSC. More details about that in future posts.

The other goal of this blog is to explore the non-generative potential of AI in music. As I mentioned above, I am not interested in generating notes or scores with a musical incarnation of ChatGPT. All the notes in my music are generated by me or my human collaborator(s). However, AI can be used to produce networks, communicate, and build interactive systems. I am also not interested in creating self-improvising AI systems, but I find the study of these systems very illuminating, because we are asking many of the same questions and trying to solve some of the same problems, such as feature recognition and the use of musical corpora (large collections of audio data, or folders of audio samples).