Fluid Flutes

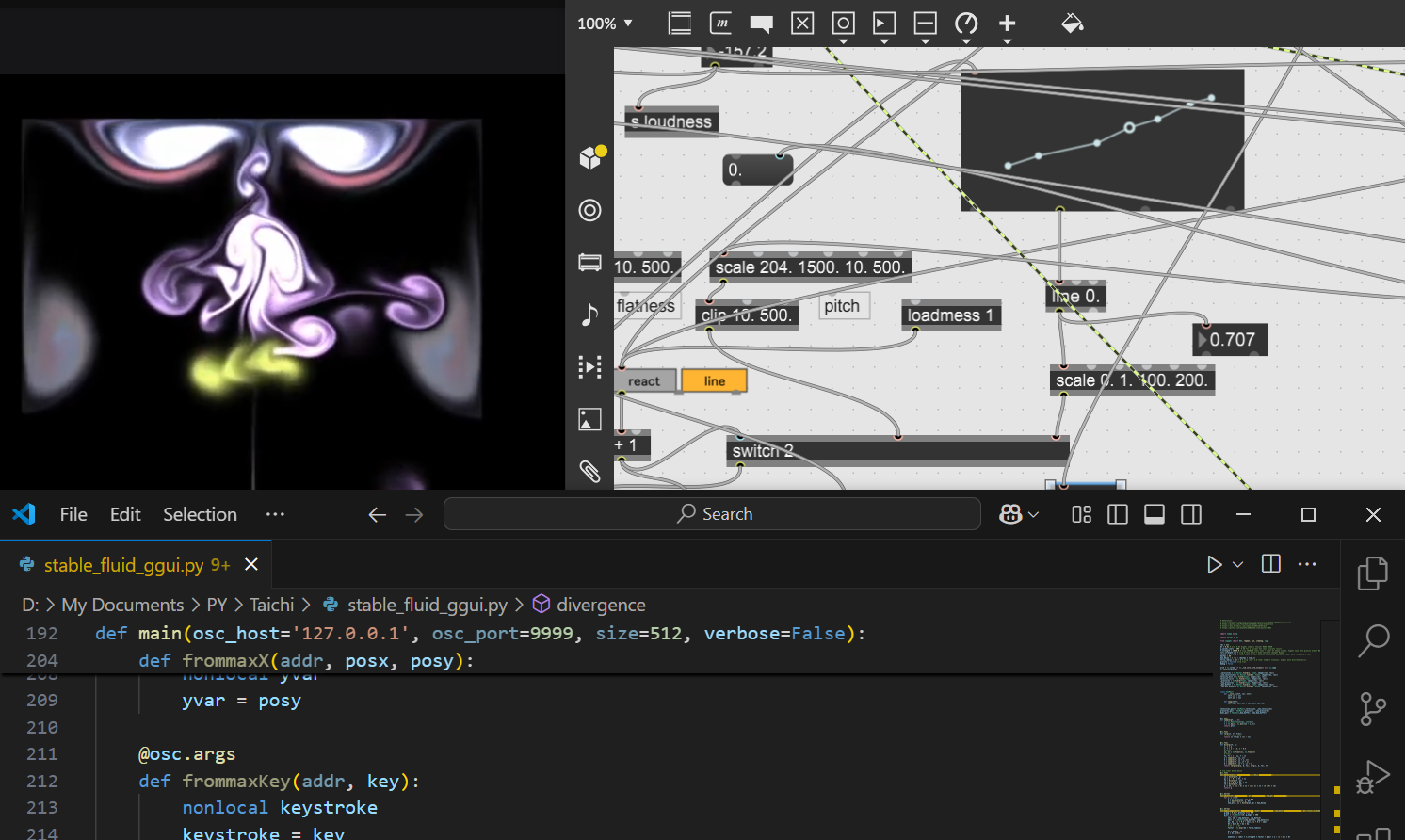

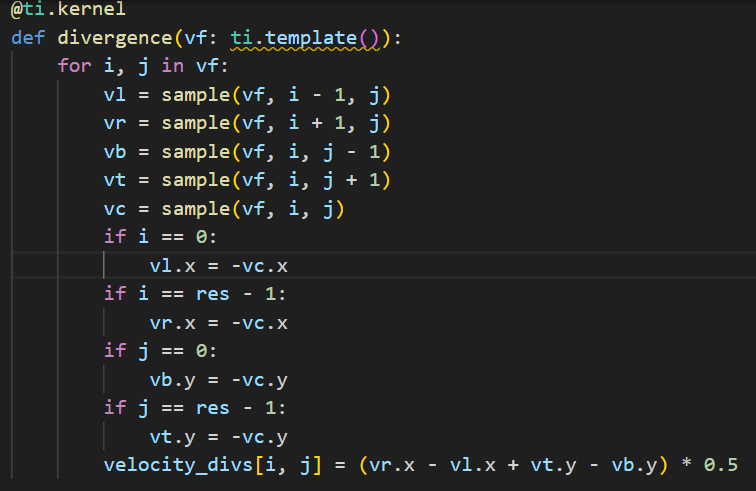

This is a recap of a stable fluid simulation that I ran with flute sounds. Built on Taichi's GGUI, the simulation creates a beautiful, lava-lamp-like motion and offers several parameters that can be modulated. The original code ships with Taichi: download Taichi, open its examples folder, and look in the ggui subfolder for stable_fluid_ggui.py. To set the fluid in motion, the code was already set up to receive input from the computer keyboard and react to mouse movements, which was a massive bonus. I am not the cleverest coder, and my skills are still quite lacking, but with help I was able to hack these controls into OSC endpoints, replacing the keyboard and mouse controls with audio-reactive ones.

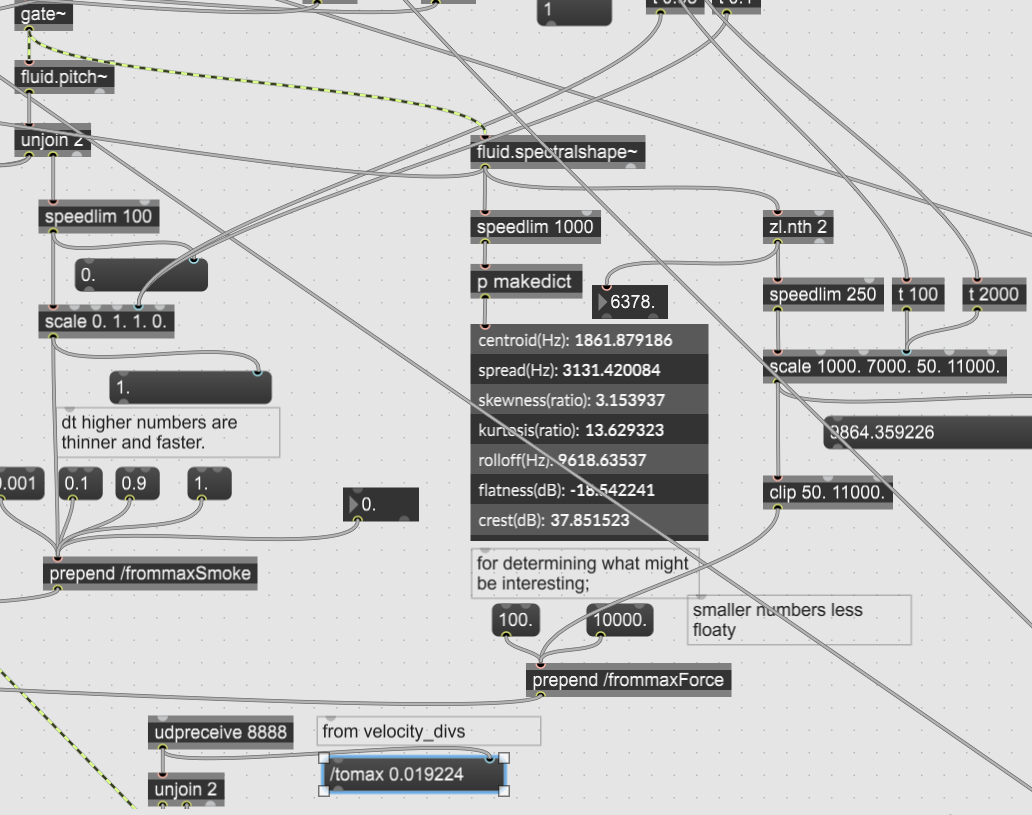

At the moment, the only useful numbers that I got out of the code were the velocity divergences, which sent out a pair of numbers that I could then scale to modulate musical parameters. For this experiment, I used them only to pan the stereo field left and right, just to keep things simple for now. I need to study the code further to work out how to expose other useful variables. For me, this is the big challenge.
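The scale-then-pan step can be sketched roughly as follows. This is a minimal illustration, not the mapping used in the piece: the input range of -1 to 1 and the equal-power pan law are assumptions, and the function names are invented for the example.

```python
import math

def scale(value, in_lo, in_hi, out_lo=0.0, out_hi=1.0):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))  # keep out-of-range simulation values from overshooting
    return out_lo + t * (out_hi - out_lo)

def equal_power_pan(position):
    """Turn a pan position (0 = hard left, 1 = hard right) into (left, right) gains."""
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)

# Hypothetical value coming out of the simulation, assumed to lie in [-1, 1].
simulation_value = 0.0
left_gain, right_gain = equal_power_pan(scale(simulation_value, -1.0, 1.0))
```

With an equal-power law the squared gains always sum to 1, so the perceived loudness stays roughly constant as the sound moves across the stereo field.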

It was far easier to get numbers into the code from Max/MSP via OSC since, as I mentioned, the code was already set up to receive keyboard and mouse input from the computer.
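For a sense of what travels over the wire, here is a minimal sketch of encoding and decoding an OSC message with only the Python standard library. The address `/fluid/force` and its two float arguments are invented for illustration, and the sketch handles float arguments only; in practice an OSC library would do this work.

```python
import struct

def _pad4(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def encode_osc(address: str, *floats: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(floats)
    packet = _pad4(address.encode("ascii")) + _pad4(type_tags.encode("ascii"))
    for value in floats:
        packet += struct.pack(">f", value)  # OSC numbers are big-endian
    return packet

def _read_string(packet: bytes, offset: int):
    """Read a padded OSC string; return it with the offset of the next field."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3  # skip terminator and padding to a 4-byte boundary
    return text, next_offset

def decode_osc(packet: bytes):
    """Return (address, [floats]) for a float-only OSC message."""
    address, offset = _read_string(packet, 0)
    type_tags, offset = _read_string(packet, offset)
    values = []
    for tag in type_tags[1:]:  # skip the leading comma
        if tag != "f":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        values.append(struct.unpack_from(">f", packet, offset)[0])
        offset += 4
    return address, values

# Hypothetical message standing in for a mouse drag: an x and a y component.
packet = encode_osc("/fluid/force", 0.5, -0.25)
address, values = decode_osc(packet)
```

The decoded values could then be fed to whatever part of the simulation previously read the mouse position or key state.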

With the helpful analysis tools of the FluCoMa package for Max, I was able to send out data that corresponded to my sounds. As an experiment, this was "easy"; the challenge, however, is to make a longer piece that doesn't always show the same correspondences, or to make these correspondences shift over time. To do this over the short span of the demo, I used a clocker in Max to trigger different thresholds at certain times, so that the movements would at times reflect only the vertical axis, and at times both the vertical and horizontal axes. At times, the vertical axis follows the pitch. I left it like this, but now I see the drawback: it starts to look more like an analysis of the music, along the lines of an animated spectrogram with high notes up top and low notes at the bottom. A little bit of that is OK, but it is also a behavior I need to shift from time to time.
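The idea of a clock switching between mappings can be sketched like this. It is a simplified stand-in for the Max patch, not a transcription of it: the section times, the mode names, and the choice of pitch for the vertical axis and loudness for the horizontal axis are all assumptions, with both features taken as already normalised to 0..1.

```python
from bisect import bisect_right

# (start time in seconds, mapping mode); hypothetical values for illustration.
SCHEDULE = [(0.0, "vertical"), (20.0, "both"), (45.0, "vertical")]
_STARTS = [start for start, _ in SCHEDULE]

def active_mode(t):
    """Return the mapping mode in force at time t (seconds since the start)."""
    index = bisect_right(_STARTS, t) - 1
    return SCHEDULE[max(index, 0)][1]

def map_features(t, pitch, loudness):
    """Map normalised audio features to an (x, y) pair according to the schedule."""
    mode = active_mode(t)
    y = pitch  # assumption: the vertical axis follows pitch in every mode
    x = loudness if mode == "both" else 0.0  # horizontal axis only in "both" sections
    return x, y
```

Adding more modes to the schedule, or swapping which feature drives which axis per section, is one way to keep the picture from settling into a fixed high-notes-up, low-notes-down reading.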

There is also the question of whether I should use a clocker, or time in general, to shift the parameters. There are other options, such as setting up a neural network that will set the x/y behavior according to what I am playing. That still creates one-to-one correspondences, albeit more complex ones. Fortunately, laptops and GPUs are now good enough that I could get two networks running and interpolate between them; that would be one theoretical possibility.

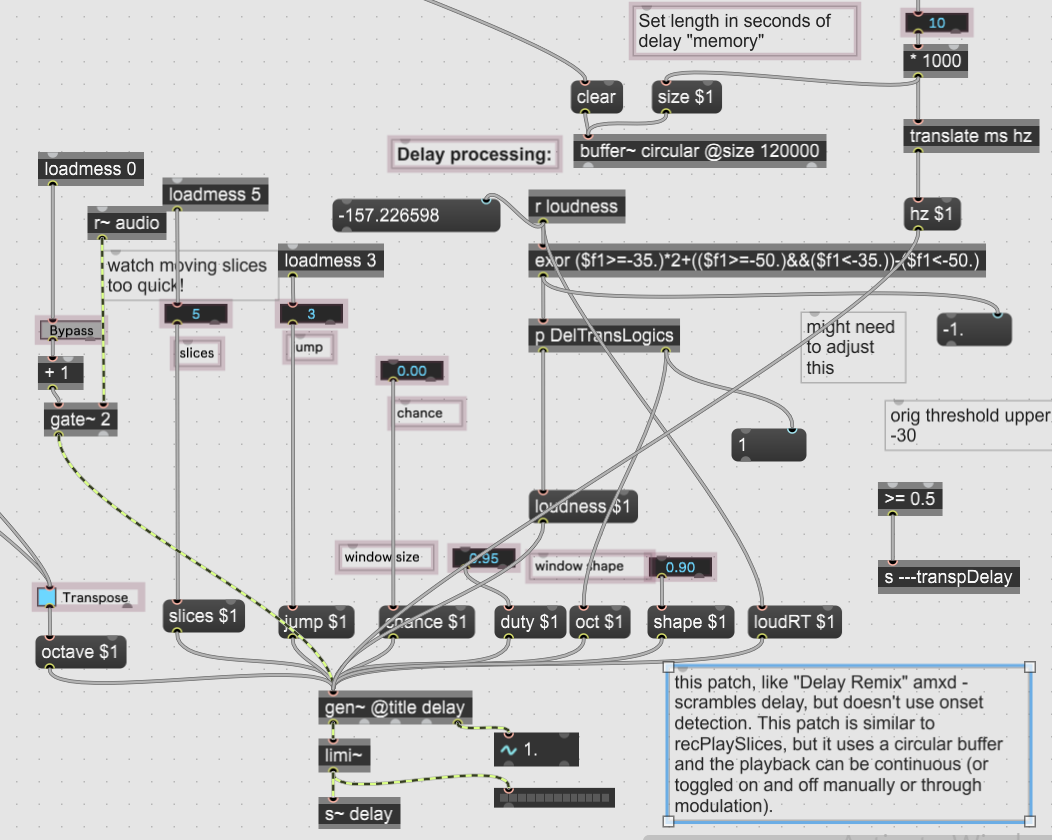

A quick note on the audio: the delay system was inspired by the examples in Generating Sound and Organizing Time by Taylor and Wakefield. The trick for me was to apply a circular buffer that could re-mix the delay without clicks, and to make the transposition conditional (in buffer terms, a faster or slower read). In this case, I opted for the simple condition of loudness. I like the way the buffer is set up: it opens up possibilities for a shorter or longer memory from which to do re-mixes, for how long the re-mixed segments can be, and for how much playback can jump around from sample to sample.
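A minimal Python sketch of a circular buffer read at a variable rate, with the rate chosen by a loudness condition, might look like the following. It is not the gen~ patch from the piece or the book's code: the threshold and rates are hypothetical, and the windowing or crossfading that keeps re-mixed segments free of clicks is left out for brevity.

```python
class CircularBuffer:
    """Fixed-size audio memory that overwrites its oldest samples."""

    def __init__(self, size):
        self.data = [0.0] * size
        self.size = size
        self.write_pos = 0

    def write(self, sample):
        self.data[self.write_pos] = sample
        self.write_pos = (self.write_pos + 1) % self.size  # wrap around at the end

    def read(self, position):
        """Read at a fractional position using linear interpolation, with wrapping."""
        position %= self.size
        i0 = int(position)
        i1 = (i0 + 1) % self.size
        frac = position - i0
        return self.data[i0] * (1.0 - frac) + self.data[i1] * frac

def read_rate(loudness, threshold=0.5, loud_rate=2.0, quiet_rate=1.0):
    """Pick a playback rate from loudness (hypothetical threshold and rates)."""
    return loud_rate if loudness > threshold else quiet_rate

def play_segment(buffer, start, length, rate):
    """Read `length` output samples from `start`, stepping by `rate` each sample."""
    return [buffer.read(start + n * rate) for n in range(length)]

# Fill a tiny buffer with a ramp, then read it back at double speed.
buf = CircularBuffer(8)
for sample in range(8):
    buf.write(float(sample))
segment = play_segment(buf, start=0, length=4, rate=read_rate(loudness=0.8))
```

A rate of 2.0 reads through the stored audio twice as fast, which transposes it up an octave; the buffer size sets how far back the memory reaches, and `start` and `length` set where a re-mixed segment begins and how long it lasts.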

The reverb is gen's freeverb, a bit on the metallic side because, in my imagination, the fluid is obviously in a metal container. That could be adjusted as well.

My next goal is to bring these visuals into Jitter, but that is another entry.