Orbital Life

So after getting into Tölvera, I decided to create a movement of my own. Tölvera has a number of "beings", such as flock, swarm, and slime, each with its own way of moving in the A-Life environment, and all of them can be composed and combined. Mine is not very original: it is based heavily on the particle-life model. Basically, I took that model and added some rotation to the mix, so I called it an "orbital" creature.

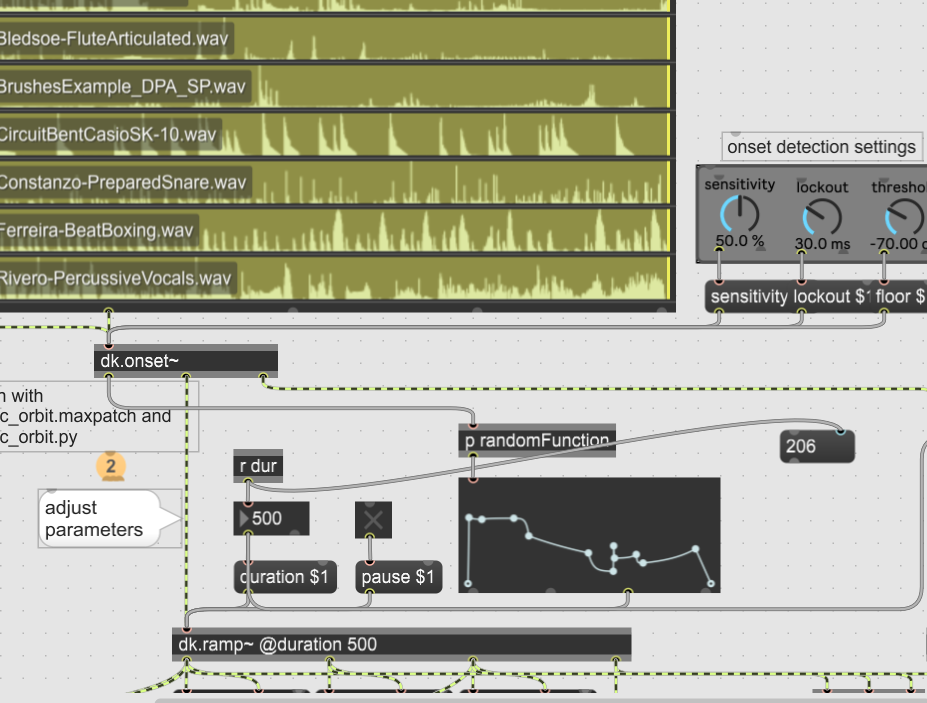
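
To make the "particle life plus rotation" idea concrete, here is a minimal sketch in plain Python. It is not my actual Tölvera code and it does not use Tölvera's API. The function name `orbital_step` and the parameters `attract`, `radius`, `rotation`, `dt`, and `friction` are all made up for illustration. The assumption is that each neighbor within `radius` exerts the usual particle-life pull or push along the line between the two particles, plus a sideways (tangential) push scaled by `rotation`, which is what makes particles circle each other.

```python
import math


def orbital_step(pos, vel, species, attract, radius, rotation,
                 dt=0.1, friction=0.9):
    """Advance every particle by one step (illustrative O(n^2) sketch).

    pos, vel : lists of (x, y) tuples
    species  : list of species indices, one per particle
    attract  : matrix, attract[a][b] = how strongly species a is drawn to b
    rotation : hypothetical knob; 0.0 gives plain particle life
    """
    n = len(pos)
    new_vel = []
    for i in range(n):
        fx = fy = 0.0
        for j in range(n):
            if i == j:
                continue
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            dist = math.hypot(dx, dy)
            if dist == 0.0 or dist > radius:
                continue  # out of reach, or exactly overlapping
            ux, uy = dx / dist, dy / dist  # unit vector towards j
            # Radial part: pull (or push) that fades to zero at `radius`.
            strength = attract[species[i]][species[j]] * (1.0 - dist / radius)
            fx += strength * ux
            fy += strength * uy
            # Orbital part: same magnitude, turned 90 degrees to (-uy, ux).
            fx += rotation * strength * -uy
            fy += rotation * strength * ux
        # Integrate the force, then damp the velocity.
        new_vel.append(((vel[i][0] + fx * dt) * friction,
                        (vel[i][1] + fy * dt) * friction))
    new_pos = [(p[0] + v[0] * dt, p[1] + v[1] * dt)
               for p, v in zip(pos, new_vel)]
    return new_pos, new_vel
```

With `rotation=0.0` two attracting particles simply fall towards each other. With a non-zero `rotation` they also get pushed in opposite sideways directions, so they start to circle.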

The idea is to use these movements to interact with sound. In this first try, I used my own flute sounds to trigger some wacky FM synth sounds. They nicely capture the chaotic setting I chose for the visuals: 3 orbital species with 1,000 particles. What's cool about these orbital creatures is that they easily fall into symbiosis (which isn't really apparent in the short clip; see this longer, silent clip for better examples of this behavior). Nevertheless, I wanted to create a symbiotic feedback loop. It goes like this: my sound, once it crosses a loudness threshold, triggers an envelope. The shape of that envelope modulates the strength and reach of the attraction between two species. The species then react and change their movement, and their speed determines the duration of the envelope.
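
The feedback loop above can be sketched roughly like this. Again, this is a plain-Python illustration and not my actual patch: the `Envelope` class, the half-sine shape, the `feedback_step` function, and every parameter name and default value are assumptions. In particular, the text only says that speed determines the envelope duration, so the direction chosen here (faster creatures give shorter envelopes) is a guess.

```python
import math


class Envelope:
    """One-shot envelope with a half-sine shape (the shape is an assumption)."""

    def __init__(self, duration=1.0):
        self.duration = duration
        self.elapsed = None  # None means the envelope is idle

    def trigger(self):
        self.elapsed = 0.0

    def tick(self, dt):
        """Advance by dt seconds and return the current level in [0, 1]."""
        if self.elapsed is None:
            return 0.0
        self.elapsed += dt
        if self.elapsed >= self.duration:
            self.elapsed = None  # finished, back to idle
            return 0.0
        return math.sin(math.pi * self.elapsed / self.duration)


def feedback_step(env, loudness, mean_speed, dt, threshold=0.3,
                  base_strength=0.5, base_reach=50.0, depth=1.0,
                  min_dur=0.2, max_dur=4.0, speed_scale=1.0):
    """Run one control tick; return (strength, reach) for the chosen pair."""
    # 1. Sound -> envelope: a loud enough input triggers an idle envelope.
    if env.elapsed is None and loudness > threshold:
        # 3. Movement -> envelope: speed sets the duration.
        #    Assumed mapping: faster motion means a shorter envelope.
        raw = speed_scale / max(mean_speed, 1e-6)
        env.duration = max(min_dur, min(max_dur, raw))
        env.trigger()
    # 2. Envelope -> creatures: the level scales attraction strength and reach.
    level = env.tick(dt)
    strength = base_strength * (1.0 + depth * level)
    reach = base_reach * (1.0 + depth * level)
    return strength, reach
```

Each frame, `loudness` would come from the audio analysis and `mean_speed` from the two interacting species, and the returned `strength` and `reach` would be written back into their attraction parameters.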

So what's good, what's bad, and what do I want to do better? I like the fact that orbital creatures have their own rhythm, while my sounds are at times punctuated and rhythmic, so the visual motion is not always boringly predictable. I think there is a decent amount of chaos mixed with calmer stuff, just like the sounds. Since there is already some independence, I could improve this by classifying my sounds with a neural network (I would use the FluCoMa tools for this) and using that output to choose which two species interact. I left one part out of my description: there was an element of "live" computer interaction. My flute playing is a recorded sample, so I had my hands free to "perform" which two of the three species would interact and have their attraction parameters modulated. Sometimes this had drastic effects. After a 20-minute session, I was able to figure out which pair of species suited which type of sound. So setting up a neural network would put me out of business in this regard. But there are many other things to do.

Now that I have NDI set up, I can use two laptops, which will free up my CPU and GPU so I can get Jitter running in Max. That will open up more possibilities for the visuals and sounds to interact. There will always be something for a pair of actual human hands to do.

By the way, those FM synth sounds are from a great new Max package, Data Knot by Rodrigo Constanzo. I basically plugged one of his example patches into this mix!